In this lesson, you will explore how bias can appear in algorithms. Algorithms are the systems that power many online tools, such as search engines, social media feeds, and artificial intelligence applications. Understanding bias in these systems is important for promoting fairness and responsibility in the digital world. You will learn through reading, activities, and reflections, completing everything independently. By the end of the lesson, you will be able to identify bias and consider its effects on society.

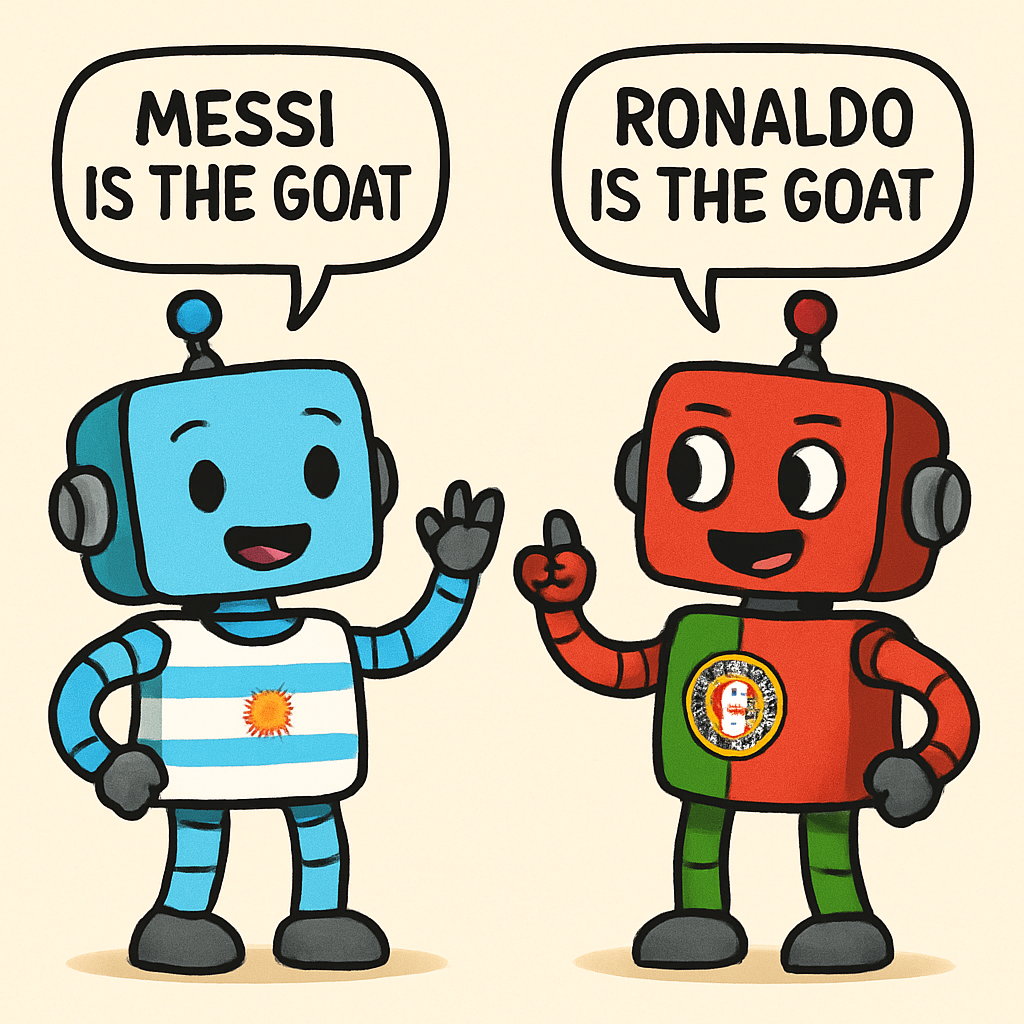

Algorithms are sets of instructions that determine what content you see online, including video recommendations and search results. However, they can sometimes be unfair due to bias, which means unjust preferences based on factors such as race, gender, or location. This lesson will guide you in recognising bias, understanding its causes, and reflecting on its broader implications.

Understanding Algorithmic Bias

Algorithmic bias occurs when computer systems produce unfair decisions or results due to issues in their data or design. For instance, consider a search engine that consistently prioritises results favouring one group over another; this is an example of bias in action.

Bias in algorithms can arise from several sources, including imbalanced training data and unintended human influences in system design.

It is essential to recognise that algorithms underpin many technologies we use daily, from social media to recommendation systems. When bias is present, it can result in real-world unfairness, affecting opportunities and perceptions in society.

Now, let us examine some everyday examples of bias in algorithms. These instances occur in tools and platforms that you might use regularly, such as search engines and social media. By exploring these, you will develop a better understanding of how bias can manifest in your daily digital experiences and learn to recognise it more effectively.

On services like YouTube or Netflix, algorithms curate content based on your location, viewing history, and other data. If you are from a particular region or demographic, the recommendations might predominantly feature content that aligns with common stereotypes associated with that group. This can create an 'echo chamber' effect, where you are exposed to a narrow range of perspectives, thereby restricting your access to diverse ideas and cultures.

Consider what happens when you enter a query into a search engine. For example, typing 'best jobs for women' might yield suggestions focused on stereotypical roles, such as nursing or teaching. In contrast, searching for 'best jobs for men' could prioritise results like engineering or technology positions. This pattern reinforces gender stereotypes and can influence perceptions of career opportunities, limiting how individuals view their potential paths.

Facial recognition features in apps and security systems sometimes fail to accurately identify individuals with darker skin tones. This issue arises because the algorithms are often trained on datasets that predominantly include images of people with lighter skin. As a result, errors can occur, leading to unfair outcomes in areas like law enforcement or access control, which disproportionately affect certain ethnic groups.
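
One way to check for the kind of problem described above is to compare how often a system makes mistakes for each group. The following Python sketch is illustrative only: the `results` list is invented sample data, not measurements from any real facial recognition system, and the function name `error_rate_by_group` is hypothetical.

```python
from collections import defaultdict

# Invented test results for illustration only:
# (skin-tone group, whether the system identified the person correctly).
results = [
    ("lighter", True), ("lighter", True), ("lighter", True), ("lighter", False),
    ("darker", True), ("darker", False), ("darker", False), ("darker", True),
]

def error_rate_by_group(records):
    """Return the share of incorrect identifications for each group."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, correct in records:
        totals[group] += 1
        if not correct:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}

rates = error_rate_by_group(results)
for group, rate in rates.items():
    print(f"{group}: {rate:.0%} of identifications were wrong")
```

With this invented data, the error rate is 25% for the lighter-skinned group and 50% for the darker-skinned group. A gap like this between groups is a warning sign that a system may treat people unequally.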

Social media platforms use algorithms to display advertisements tailored to your profile. For instance, ads might assume interests based on age, gender, or location in ways that perpetuate biases, such as showing beauty products primarily to women or sports equipment primarily to men, regardless of actual preferences. This can reinforce societal norms and prevent users from seeing a broader array of options.

In this activity, you will conduct a practical comparison of results from two different online platforms to identify potential instances of algorithmic bias. This exercise will help you observe how algorithms may present information differently and encourage you to think critically about fairness in digital systems.

Select two search engines, such as Google and Bing, or two content platforms like YouTube and TikTok. Perform the same search query on both, for example, 'Teen fashion trends', 'best jobs for women' or 'news about space exploration'. Observe and compare the results to detect any differences that might indicate bias.

To deepen your analysis, think about factors that could influence these differences, such as the platform's data sources, user base, or algorithmic design.

Example comparison for the query 'best jobs for women':

Google Results (Top 5): 1. Nursing, 2. Teaching, 3. Human Resources, 4. Marketing, 5. Social Work. Suggestions often focus on roles traditionally associated with women.

Bing Results (Top 5): 1. Nursing, 2. Administrative Assistant, 3. Teaching, 4. Healthcare Administrator, 5. Counselling. Similar emphasis on stereotypical roles, but with slight variations like more administrative suggestions.

Differences and Potential Biases: Both platforms prioritise jobs like nursing and teaching, which reinforces gender stereotypes. Google includes more 'creative' roles like marketing, while Bing leans towards administrative ones. This might stem from training data reflecting historical job patterns, leading to limited diversity in suggestions and potentially discouraging exploration of non-traditional careers. This pattern affects fairness by perpetuating societal norms and limiting opportunities.

Why This Matters: Such biases can influence how you perceive career options, making it important to question and compare results across platforms.
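
If you would like to make your comparison more systematic, a short script can list what two sets of results share and where they differ. The sketch below is one possible approach, not a required part of the activity. It uses the two sample top-5 lists from the example, and the function name `compare_results` is made up for illustration. The overlap score is the number of shared items divided by the number of distinct items across both lists.

```python
# Sample top-5 lists taken from the example comparison in this activity.
google_results = ["Nursing", "Teaching", "Human Resources", "Marketing", "Social Work"]
bing_results = ["Nursing", "Administrative Assistant", "Teaching",
                "Healthcare Administrator", "Counselling"]

def compare_results(first, second):
    """Return shared items, items unique to each list, and an overlap score."""
    first_set, second_set = set(first), set(second)
    shared = sorted(first_set & second_set)
    only_first = sorted(first_set - second_set)
    only_second = sorted(second_set - first_set)
    # Shared items divided by all distinct items (0 = nothing in common, 1 = identical).
    overlap = len(first_set & second_set) / len(first_set | second_set)
    return shared, only_first, only_second, overlap

shared, only_google, only_bing, overlap = compare_results(google_results, bing_results)
print("On both platforms:", shared)
print("Only on Google:", only_google)
print("Only on Bing:", only_bing)
print("Overlap score:", overlap)
```

For the sample lists, nursing and teaching appear on both platforms and the overlap score is 0.25. You can replace the two lists with the results you recorded yourself.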

In this step, you will examine real-world examples of bias in algorithms through detailed case studies. These examples illustrate how algorithmic bias can occur and its potential consequences. Read the information carefully and reflect on the key points to deepen your understanding.

In 2020, researchers and users discovered that Twitter's automated image cropping feature tended to favour lighter-skinned faces when generating photo previews. In photos showing several people, the algorithm often selected the portion of the image featuring individuals with lighter skin tones and cropped out those with darker skin tones. The problem is commonly attributed to training data that lacked sufficient diversity and representation of various skin tones. As a result, the system perpetuated racial bias, leading to feelings of exclusion among affected users. The example also points to broader concerns: in law enforcement, facial recognition technologies have contributed to errors, including wrongful arrests that disproportionately affect minority groups.

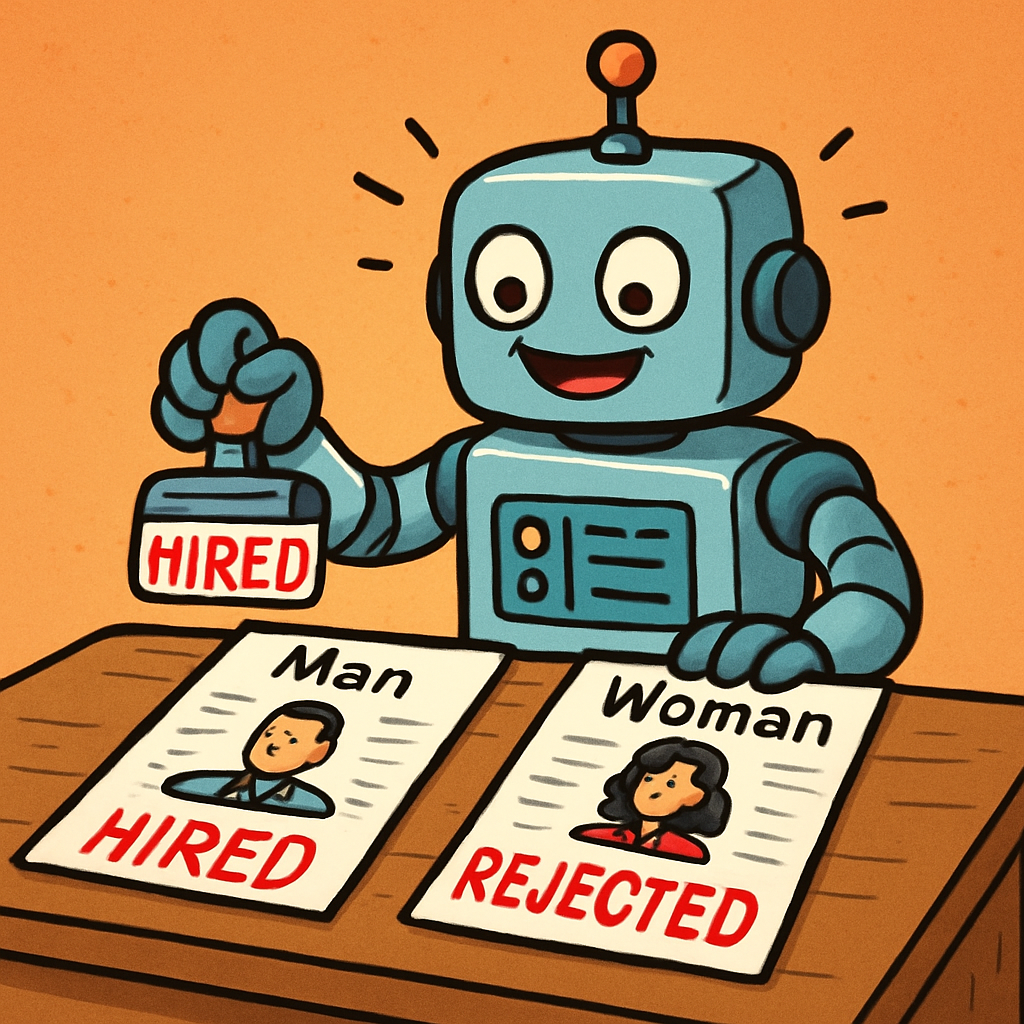

Another notable instance involved Amazon's recruitment tool, designed to screen job applications and identify top candidates. The algorithm was trained on a dataset primarily consisting of resumes from male applicants, reflecting the company's historical hiring patterns in technical roles. Consequently, the system learned to favour male candidates by downgrading resumes that included terms associated with women, such as mentions of women's colleges or all-female organisations. This led to qualified female applicants being overlooked, reinforcing gender inequality in the workplace. Amazon eventually discontinued the tool after recognising these flaws.
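
To see how a system can pick up this kind of bias from past decisions, consider the toy Python sketch below. It is not Amazon's tool or method: the `history` data is invented, and the functions `learn_word_scores` and `score_resume` are hypothetical. The sketch scores each word by how often resumes containing it were hired in the past, then scores a new resume by averaging its word scores.

```python
from collections import Counter

# Invented hiring history for illustration only:
# (words on the resume, whether the applicant was hired).
history = [
    ({"python", "chess", "club"}, True),
    ({"python", "robotics"}, True),
    ({"java", "chess", "club"}, True),
    ({"python", "women's", "chess", "club"}, False),
    ({"java", "women's", "robotics"}, False),
]

def learn_word_scores(past):
    """Score each word by the share of past resumes containing it that were hired."""
    seen = Counter()
    hired = Counter()
    for words, was_hired in past:
        for word in words:
            seen[word] += 1
            if was_hired:
                hired[word] += 1
    return {word: hired[word] / seen[word] for word in seen}

def score_resume(words, word_scores):
    """Average the learned scores of the words the system recognises."""
    known = [word_scores[word] for word in words if word in word_scores]
    return sum(known) / len(known) if known else 0.0

word_scores = learn_word_scores(history)
without_term = score_resume({"python", "chess", "club"}, word_scores)
with_term = score_resume({"python", "women's", "chess", "club"}, word_scores)
print("Score without the word \"women's\":", round(without_term, 2))
print("Score with the word \"women's\":", round(with_term, 2))
```

The two resumes are identical apart from one word, yet the second scores lower (0.5 compared with about 0.67) because no resume containing "women's" was hired in the invented history. The system has learned the pattern in the past decisions, not the applicant's ability.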

These cases demonstrate common causes of algorithmic bias, including imbalanced training data and unintended human influences in system design. They also show the real-world harm that can arise, affecting individuals' opportunities, safety, and sense of fairness in society.